1. Introduction

In this tutorial, you will learn how to Dockerize a Node.js application—a process that packages your app and all its dependencies into a portable Docker container. By doing so, you can run your application consistently across any environment, from local development to production.

In simple words, containerization is the process of running an application's source code in a container. The steps to containerize an application are:

- Create your application – source code and dependencies.

- Create a Dockerfile which describes your application, dependencies and how to run it.

- Create a Docker image from the Dockerfile using the docker build command.

- Push the image to the registry. This step is optional.

- Run a container using the image.

A Dockerfile describes an application and tells Docker how to build an image from it. Treat it like any other source code and keep it in a version control system.

As a rule of thumb, FROM, COPY, and RUN create new layers, while WORKDIR, EXPOSE, and CMD add metadata and do not create a new layer. The fundamental idea is this: when an instruction adds content such as files or programs, it generates a new layer. If the instruction instead provides guidance on how to build the image or run the container, it adds metadata.
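To make the rule of thumb concrete, here is a minimal sketch that labels each line of a Dockerfile as "layer" or "metadata". The function name classifyInstruction and the two sets are hypothetical helpers written for this tutorial; they encode only the six instructions mentioned above and are not part of Docker.

```javascript
// Simplified rule of thumb from the paragraph above. Real builders support
// more instructions and have edge cases this sketch does not cover.
const LAYER_INSTRUCTIONS = new Set(['FROM', 'COPY', 'RUN']);
const METADATA_INSTRUCTIONS = new Set(['WORKDIR', 'EXPOSE', 'CMD']);

// Returns 'layer', 'metadata', or 'unknown' for a single Dockerfile line.
function classifyInstruction(line) {
  const keyword = line.trim().split(/\s+/)[0].toUpperCase();
  if (LAYER_INSTRUCTIONS.has(keyword)) return 'layer';
  if (METADATA_INSTRUCTIONS.has(keyword)) return 'metadata';
  return 'unknown';
}

const dockerfileLines = [
  'FROM node:14',
  'WORKDIR /usr/src/app',
  'COPY package*.json ./',
  'RUN npm install',
  'EXPOSE 3000',
  'CMD ["node", "app.js"]',
];

for (const line of dockerfileLines) {
  console.log(`${classifyInstruction(line).padEnd(8)} ${line}`);
}
```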

2. Create and run an image for the application

Creating a simple Node.js application and a corresponding Dockerfile is straightforward. Below are the steps to set up a basic Node.js app, including the necessary Dockerfile for containerization.

Step 1: Create the Node.js Application

1. Create a project directory:

mkdir simple-node-app
cd simple-node-app

2. Initialize a new Node.js project:

npm init -y

3. Install Express (a web framework for Node.js):

npm install express

4. Create a file named app.js in the project directory with the following content:

const express = require('express');

const app = express();
const PORT = process.env.PORT || 3000;

app.get('/', (req, res) => {
  res.send('Hello, World!');
});

app.listen(PORT, () => {
  console.log(`Server is running on port ${PORT}`);
});

Step 2: Create a Dockerfile

A Dockerfile is a text file containing a set of instructions that Docker uses to build a container image. It describes the steps needed to assemble the environment and software dependencies required to run an application inside a Docker container. Docker reads this file, follows the instructions, and creates a container image, which can then be deployed in any environment that supports Docker.

Create a file named Dockerfile in the project directory:

# Use the official Node.js image
FROM node:14

# Set the working directory
WORKDIR /usr/src/app

# Copy package.json and package-lock.json
COPY package*.json ./

# Install dependencies
RUN npm install

# Copy the rest of the application code
COPY . .

# Expose the port the app runs on
EXPOSE 3000

# Command to run the app
CMD ["node", "app.js"]

Here are some common instructions found in a Dockerfile:

- FROM: Specifies the base image to start with. For example, FROM node:14 means the image will be based on the node image with the tag 14.

- RUN: Executes a command in a shell while the image is being built. It is often used to install dependencies. For example, RUN npm install installs dependencies in the image.

- COPY or ADD: Copies files from your local filesystem into the image.

- WORKDIR: Sets the working directory inside the container.

- CMD: Specifies the default command to run when the container starts. For example, CMD ["node", "app.js"] will run the app.js script by default.

Step 3: Create a .dockerignore File

It’s a good practice to create a .dockerignore file to prevent unnecessary files from being copied into the Docker image. Create a file named .dockerignore and add the following content:

node_modules
npm-debug.log
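The effect of these two entries can be sketched in a few lines of JavaScript. This is a deliberately simplified model: isIgnored is a hypothetical helper that only handles plain file and directory names, whereas real .dockerignore files also support wildcards and "!" exceptions. The file list is made up for illustration.

```javascript
// Simplified matcher: a path is ignored when it equals an entry or lives
// under a directory named by an entry. Wildcards and "!" exceptions, which
// real .dockerignore files support, are intentionally not handled here.
function isIgnored(path, ignoreEntries) {
  return ignoreEntries.some(
    (entry) => path === entry || path.startsWith(`${entry}/`)
  );
}

const ignoreEntries = ['node_modules', 'npm-debug.log'];

// Hypothetical project files, for illustration only.
const projectFiles = [
  'app.js',
  'package.json',
  'package-lock.json',
  'npm-debug.log',
  'node_modules/express/index.js',
];

// Only the files that survive the filter would be sent to the build.
const buildContext = projectFiles.filter((f) => !isIgnored(f, ignoreEntries));
console.log(buildContext);
```

Keeping node_modules out of the build context matters here because the Dockerfile installs dependencies inside the image with RUN npm install, so the local copy is not needed.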

Step 4: Build the Docker Image

Now that you have your application and Dockerfile set up, use the docker build command to create a new image from the instructions in the Dockerfile:

docker build -t simple-node-app .

Step 5: Run the Docker Container

Run the Docker container, publishing port 3000 of the container on port 3000 of the host:

docker run -p 3000:3000 simple-node-app
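The value passed to -p is read as host port first, container port second. The sketch below spells that out for the simple two-part form only; parsePublish is a hypothetical helper for illustration, and longer forms (with an IP address or a protocol suffix) are rejected rather than handled.

```javascript
// Parses the simple "hostPort:containerPort" form of the -p value.
// Other forms accepted by docker run are out of scope for this sketch.
function parsePublish(spec) {
  const parts = spec.split(':');
  if (parts.length !== 2) {
    throw new Error(`unsupported -p value: ${spec}`);
  }
  const [hostPort, containerPort] = parts.map(Number);
  const valid = (p) => Number.isInteger(p) && p > 0 && p <= 65535;
  if (!valid(hostPort) || !valid(containerPort)) {
    throw new Error(`invalid port in -p value: ${spec}`);
  }
  return { hostPort, containerPort };
}

// The mapping used in this tutorial: same port on both sides.
console.log(parsePublish('3000:3000'));

// A different host port: the app still listens on 3000 inside the
// container, but you would browse to port 8080 on your machine.
console.log(parsePublish('8080:3000'));
```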

Step 6: Access the Application

Open your browser and go to http://localhost:3000. You should see “Hello, World!” displayed.

3. Pushing images to registry

Just as you push your code to GitHub, you can push your image to a registry. The most common public image registry is Docker Hub. You need a Docker Hub ID to push an image to the registry.

We have an image simple-node-app with tag v3. We’ll push it to the simple-node-app repository under our Docker Hub ID, learnitweb.

>docker tag simple-node-app:v3 learnitweb/simple-node-app:v3
>docker push learnitweb/simple-node-app:v3
The push refers to repository [docker.io/learnitweb/simple-node-app]
f624836a3b17: Pushed
1697423e73b6: Pushed
89b22097ce12: Pushed
a2361fe84c63: Pushed
0d5f5a015e5d: Mounted from library/node
3c777d951de2: Mounted from library/node
f8a91dd5fc84: Mounted from library/node
cb81227abde5: Mounted from library/node
e01a454893a9: Mounted from library/node
c45660adde37: Mounted from library/node
fe0fb3ab4a0f: Mounted from library/node
f1186e5061f2: Mounted from library/node
b2dba7477754: Mounted from library/node
v3: digest: sha256:55a2da454c74b1deba95b9b07c3a4a2abba8611fd1763b6464d33c3e2f9060e4 size: 3049

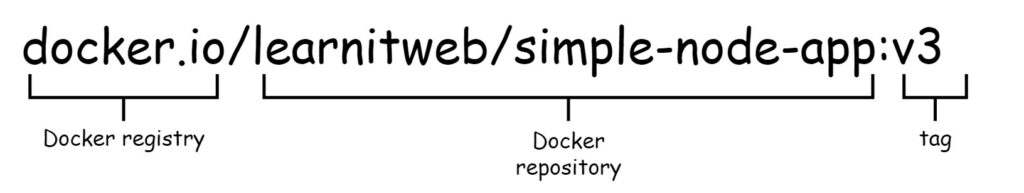

Before pushing images, it’s essential to tag them properly. This is because the tag contains key registry-related details such as:

- The registry’s DNS name

- The repository name

- The specific tag for the image
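The three parts listed above can be pulled out of an image name with a small parser. The sketch below is an approximation written for this tutorial, not Docker's own code: parseImageReference is a hypothetical name, digests (the @sha256:... form) are not handled, and the defaults it fills in (docker.io as the registry, library/ for single-name images, latest as the tag) reflect Docker Hub conventions.

```javascript
// Splits an image reference into registry, repository, and tag.
// Assumption: no digest ("@sha256:...") is present in the reference.
function parseImageReference(ref) {
  // The last ":" is a tag separator only if it comes after the last "/";
  // otherwise it belongs to a registry port such as localhost:5000.
  const lastSlash = ref.lastIndexOf('/');
  const lastColon = ref.lastIndexOf(':');
  let name = ref;
  let tag = 'latest';
  if (lastColon > lastSlash) {
    name = ref.slice(0, lastColon);
    tag = ref.slice(lastColon + 1);
  }

  // The first path component is a registry host only if it looks like one.
  const firstSlash = name.indexOf('/');
  const first = firstSlash === -1 ? '' : name.slice(0, firstSlash);
  const looksLikeRegistry =
    first.includes('.') || first.includes(':') || first === 'localhost';

  let registry = 'docker.io';
  let repository = name;
  if (looksLikeRegistry) {
    registry = first;
    repository = name.slice(firstSlash + 1);
  } else if (firstSlash === -1) {
    // Single-name images such as "node" live under "library/" on Docker Hub.
    repository = `library/${name}`;
  }
  return { registry, repository, tag };
}

console.log(parseImageReference('learnitweb/simple-node-app:v3'));
console.log(parseImageReference('node:14'));
```

For the image pushed earlier, the parsed registry and repository together give docker.io/learnitweb/simple-node-app, which matches the repository shown in the push output.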

Refer to the following figure:

4. Multi-stage build

A multi-stage build in Docker is a feature that allows you to use multiple FROM statements in your Dockerfile to create intermediate images during the build process. This method is useful for reducing the final image size and separating the build and runtime environments.

In a multi-stage build, the first (and possibly multiple) stages can include everything needed to compile or build the application (like compilers, libraries, etc.), while the final stage contains only the necessary artifacts to run the application, keeping it lightweight.

4.1 Why Use Multi-Stage Builds?

- Smaller Image Sizes: Only the required components are included in the final image.

- Security: You can exclude build-time dependencies like compilers, reducing the attack surface.

- Efficiency: By splitting the build process into stages, you optimize resource use, preventing unnecessary files and software from being included in the final image.

Example of a Multi-Stage Build:

# First stage: Build the application
FROM golang:1.18 as builder
WORKDIR /app
COPY . .
# CGO_ENABLED=0 produces a statically linked binary that can run on Alpine
RUN CGO_ENABLED=0 go build -o myapp

# Second stage: Create a lightweight image to run the app
FROM alpine:latest
WORKDIR /app
COPY --from=builder /app/myapp .
CMD ["./myapp"]

Breakdown

Stage 1 (builder):

- A Go image is used to compile a Go application.

- The WORKDIR is set to /app, and the source code is copied into this directory.

- The application is built using go build, creating an executable named myapp.

Stage 2 (alpine):

- A minimal Alpine Linux image is used as the runtime environment.

- The built executable myapp is copied from the previous build stage (builder) using COPY --from=builder.

- The final image contains only the binary needed to run the app, without the Go compiler and other build tools.

Benefits:

- The final Docker image is much smaller since it only contains the application binary and minimal dependencies, without all the build tools.

- This method allows you to use larger, more feature-rich images during the build phase without affecting the size or complexity of the final production image.

Key Points:

- FROM: Each FROM command starts a new stage.

- COPY --from=<stage>: Used to copy artifacts from one stage to another.

- You can name stages (like builder in the example) for easy reference.

- Multi-stage builds are particularly beneficial when working with languages that require large build environments, such as Go, Java, or Node.js.
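The first and third key points can be illustrated by scanning a Dockerfile for its FROM lines. The sketch below is a rough text scan written for this tutorial, not a real Dockerfile parser: listStages is a hypothetical helper, and it assumes each FROM instruction sits on its own line.

```javascript
// Lists the stages of a Dockerfile: every FROM line starts a new stage,
// and an optional "AS <name>" gives that stage a name.
function listStages(dockerfileText) {
  const stages = [];
  // Optional flags such as --platform=... are skipped before the image name.
  const fromPattern = /^FROM\s+(?:--\S+\s+)*(\S+)(?:\s+AS\s+(\S+))?/i;
  for (const rawLine of dockerfileText.split('\n')) {
    const match = fromPattern.exec(rawLine.trim());
    if (match) {
      stages.push({
        index: stages.length,
        baseImage: match[1],
        name: match[2] || null, // null when the stage is unnamed
      });
    }
  }
  return stages;
}

const multiStageDockerfile = [
  'FROM golang:1.18 as builder',
  'WORKDIR /app',
  'COPY . .',
  'RUN go build -o myapp',
  'FROM alpine:latest',
  'WORKDIR /app',
  'COPY --from=builder /app/myapp .',
  'CMD ["./myapp"]',
].join('\n');

console.log(listStages(multiStageDockerfile));
```

For the Go example, the scan finds two stages: stage 0 based on golang:1.18 and named builder, and an unnamed stage 1 based on alpine:latest, which is the one that becomes the final image.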

5. Build cache

In Docker, build caching is a mechanism that reuses the results of previous builds to speed up the image-building process. Docker caches the intermediate layers that are created during the image build process, and if nothing has changed in a layer, Docker will reuse the cached version instead of rebuilding it from scratch.

5.1 How Docker Builds Images

- Dockerfile Instructions: Each instruction (e.g., FROM, COPY, RUN) in a Dockerfile creates an image layer.

- Layers: Docker builds the image layer by layer, with each instruction adding a new layer on top of the previous one.

- Caching: If Docker detects that an instruction and its context (e.g., files copied, commands run) have not changed since the last build, it will use the cached version of that layer instead of executing the instruction again.

Steps of the Docker Build Process:

- Read Dockerfile: Docker reads the Dockerfile to understand the steps required to build the image.

- Process Each Instruction:

– FROM: Docker checks for an existing cached image of the base image.

– COPY/ADD: Docker checks if the files being copied into the image have changed. If they haven’t, Docker uses the cached layer.

– RUN: If the command to be executed has not changed, Docker uses the cached result of the previous execution.

– CMD/ENTRYPOINT: These instructions are added as metadata and do not change the actual content of the image. They are often cached unless the previous steps have changed.

- Reuse or Rebuild Layers: Docker reuses cached layers if no changes are detected. If any instruction or context changes, Docker rebuilds that specific layer and any subsequent layers.

- Generate Final Image: Once all layers are processed, Docker assembles the image.

Example of Build Caching:

FROM node:16
WORKDIR /app
COPY package.json .
RUN npm install
COPY . .
CMD ["node", "app.js"]

- FROM node:16: Docker checks if the base image node:16 is already cached locally. If it is, Docker skips downloading it.

- WORKDIR /app: Creates the /app directory in the image if not cached.

- COPY package.json .: If package.json hasn’t changed since the last build, Docker reuses the cached layer.

- RUN npm install: If package.json is unchanged and the cache is valid, Docker skips the npm install step.

- COPY . .: If the application source code hasn’t changed, this layer is cached.

- CMD ["node", "app.js"]: Since this is metadata, it’s typically cached unless the previous layers have changed.
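The walkthrough above can be modeled with a short simulation. This is only an illustration of the rule "the first changed step invalidates everything after it", not how Docker is implemented. The names planBuild and stepsFor, and fingerprint strings such as 'pkg-v1', are hypothetical stand-ins for Docker's real cache keys.

```javascript
// Compares the steps of a new build with the previous build. A step is
// CACHED only if it and every step before it are unchanged.
function planBuild(previousSteps, currentSteps) {
  let cacheValid = true;
  return currentSteps.map((step, i) => {
    const prev = previousSteps[i];
    const unchanged =
      prev !== undefined &&
      prev.instruction === step.instruction &&
      prev.inputs === step.inputs;
    cacheValid = cacheValid && unchanged;
    return {
      instruction: step.instruction,
      status: cacheValid ? 'CACHED' : 'REBUILD',
    };
  });
}

// Builds the step list for the example Dockerfile. "inputs" is a made-up
// fingerprint of the files a COPY step reads; other steps read no files.
function stepsFor(files) {
  return [
    { instruction: 'FROM node:16', inputs: '' },
    { instruction: 'WORKDIR /app', inputs: '' },
    { instruction: 'COPY package.json .', inputs: files.packageJson },
    { instruction: 'RUN npm install', inputs: '' },
    { instruction: 'COPY . .', inputs: `${files.packageJson}|${files.source}` },
    { instruction: 'CMD ["node", "app.js"]', inputs: '' },
  ];
}

const firstBuild = stepsFor({ packageJson: 'pkg-v1', source: 'src-v1' });

// Only the source code changed: npm install is still served from the cache.
const afterSourceEdit = stepsFor({ packageJson: 'pkg-v1', source: 'src-v2' });
console.log(planBuild(firstBuild, afterSourceEdit));

// package.json changed: everything from COPY package.json onward is rebuilt.
const afterDependencyEdit = stepsFor({ packageJson: 'pkg-v2', source: 'src-v1' });
console.log(planBuild(firstBuild, afterDependencyEdit));
```

The first scenario shows why package.json is copied before the rest of the source: editing app.js leaves the npm install step cached and rebuilds only COPY . . and the steps after it.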

Benefits of Build Caching:

- Faster Builds: By skipping unchanged instructions, Docker can significantly reduce build times.

- Resource Efficiency: Reusing cached layers saves CPU, memory, and network bandwidth.

- Incremental Updates: Only layers that have changed need to be rebuilt, making iterative development faster.

Managing the Build Cache:

--no-cache: You can force Docker to ignore the cache and rebuild all layers from scratch with the --no-cache flag:

docker build --no-cache -t myimage .

docker system prune: This command removes unused data, including stopped containers, unused networks, dangling images, and unused build cache:

docker system prune

Multi-Stage Builds: Multi-stage builds help reduce the cache size by separating the build environment from the final image, ensuring that only necessary files are part of the cache.

Caching Limitations:

- Changes in Context: If files or directories copied into the image change, Docker rebuilds that layer and all subsequent ones.

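- Non-deterministic Commands: Docker decides whether to reuse a RUN layer from the command text, not from what the command would produce today. A command whose result changes over time (e.g., downloading a package from the internet) is therefore not re-run while its layer is cached, so the image can contain outdated content until you rebuild with --no-cache.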

Optimizing Caching in Docker Builds:

- Order Instructions Wisely: Place frequently changing instructions (like COPY . .) later in the Dockerfile to maximize cache reuse.

- Use .dockerignore: Exclude unnecessary files and directories from the build context using a .dockerignore file to reduce cache invalidation.

- Pin Dependencies: Use versioned packages and pinned dependencies to avoid breaking the cache unnecessarily.

By using Docker’s build cache effectively, you can speed up builds, optimize resource usage, and improve your development and deployment workflows.

6. Conclusion

Congratulations! You have successfully created and deployed a simple application using Docker. By following this tutorial, you’ve learned how to:

- Write a Dockerfile to define your application’s environment.

- Build a Docker image from that Dockerfile.

- Run the application inside a Docker container.

- Deploy the container on any machine that supports Docker.

With Docker, you can package your applications and their dependencies into isolated, portable containers, ensuring consistency across different environments. This workflow simplifies the development, testing, and deployment process.