1. Introduction

Docker runs applications inside containers, which often need to communicate with other applications. These other applications may also run in containers, or they may exist outside of Docker. As a result, Docker requires robust networking features. It offers solutions for both container-to-container communication and connections to external networks and VLANs. This capability is essential for containerized applications that need to interact with external services, including virtual machines (VMs) and physical servers.

Docker networking is built on an open-source, pluggable architecture known as the Container Network Model (CNM). The reference implementation of this model is provided by libnetwork, which delivers Docker’s fundamental networking functionalities. Network drivers can be plugged into libnetwork, enabling the creation of various network topologies to suit specific needs. libnetwork also offers built-in service discovery and basic container load balancing.

To ensure a seamless out-of-the-box experience, Docker comes with a set of built-in drivers that cover the most common networking needs. These include single-host bridge networks, multi-host overlay networks, and the ability to connect to existing VLANs. Additionally, ecosystem partners can enhance Docker’s capabilities by offering their own custom drivers.

2. Docker Networking

At the highest level, Docker networking consists of three key components:

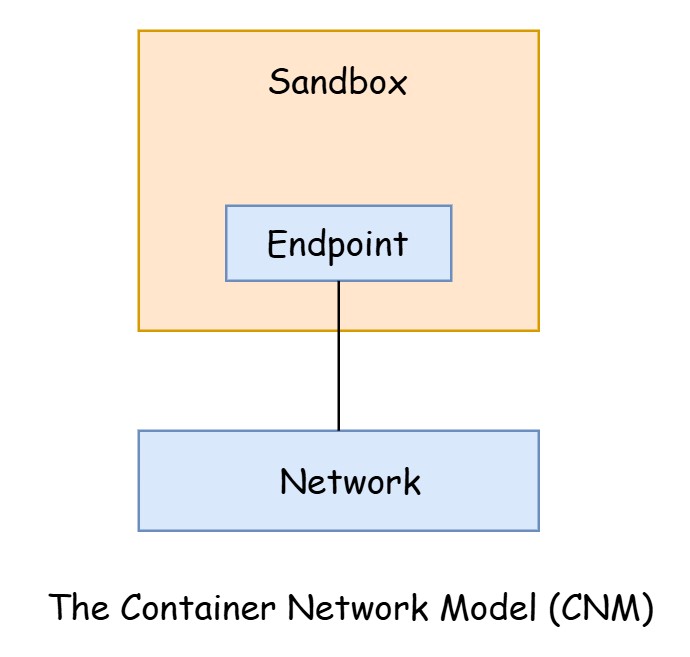

- The Container Network Model (CNM): The Container Network Model (CNM) is Docker’s open-source networking architecture that defines how container networking should work. It provides a standard framework for managing networking in containerized environments by separating responsibilities into three key elements: Network, Endpoint, and Sandbox.

- Libnetwork: Libnetwork is the practical implementation of the Container Network Model (CNM) in Docker. It provides the core networking functionalities required for container communication, enabling the creation and management of various network types, such as bridge networks and overlay networks. By serving as the reference implementation, libnetwork allows developers to easily build upon and extend networking capabilities in Docker, facilitating container-to-container connectivity and integration with external networks.

- Drivers: Drivers extend the model by implementing specific network topologies such as VXLAN overlay networks.

In short, CNM is the design, libnetwork is the implementation, and drivers are the extension.

3. The Container Network Model (CNM)

CNM outlines the fundamental building blocks of a Docker network. At a high level, it defines three building blocks:

- Sandboxes: In the Container Network Model (CNM), a sandbox is an isolated network environment that provides each container with its own network stack, allowing it to operate independently of others. It includes Ethernet interfaces, ports, routing tables, and DNS config.

- Endpoints: In the Container Network Model (CNM), an endpoint is a virtual network interface that connects a container's sandbox to a network. Endpoints are what enable containers to communicate with each other or with external systems.

- Networks: Networks function as a software-based implementation of a switch (802.1d bridge). They organize and isolate groups of endpoints that need to communicate with each other.

The following figure shows how the Container Network Model works:

Container A has one network interface (endpoint) and is linked to Network A. Container B, on the other hand, has two network interfaces (endpoints) and is connected to both Network A and Network B. Since both containers are connected to Network A, they can communicate with each other. However, the two endpoints in Container B cannot interact directly without the help of a layer 3 router.

It’s important to note that endpoints function like standard network adapters, meaning they can only connect to one network at a time. As a result, a container that needs to connect to multiple networks will require multiple endpoints.

Although Container A and Container B are running on the same host, their network stacks are completely isolated at the OS level by their sandboxes, so they can only communicate via a network.
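The Container A and Container B arrangement described above can be sketched as a small toy model. This is only an illustration of the three CNM building blocks, not libnetwork code: the class names, the `can_communicate` helper, and the third container are all hypothetical and exist only for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Network:
    """Toy stand-in for a CNM network: a named software switch."""
    name: str


@dataclass
class Endpoint:
    """A virtual interface that attaches to exactly one network."""
    network: Network


@dataclass
class Sandbox:
    """An isolated network stack owned by a single container."""
    container: str
    endpoints: list = field(default_factory=list)

    def connect(self, network: Network) -> Endpoint:
        # Each connection creates a new endpoint, mirroring the
        # one-network-per-endpoint rule described in the text.
        endpoint = Endpoint(network)
        self.endpoints.append(endpoint)
        return endpoint

    def networks(self) -> set:
        return {ep.network.name for ep in self.endpoints}


def can_communicate(a: Sandbox, b: Sandbox) -> bool:
    """Two sandboxes can talk directly only if they share a network."""
    return bool(a.networks() & b.networks())


net_a, net_b = Network("network-a"), Network("network-b")
container_a = Sandbox("container-a")
container_b = Sandbox("container-b")
container_c = Sandbox("container-c")  # hypothetical, not in the original example

container_a.connect(net_a)
container_b.connect(net_a)
container_b.connect(net_b)  # second endpoint for the second network
container_c.connect(net_b)

print(can_communicate(container_a, container_b))  # True: both are on network-a
print(can_communicate(container_a, container_c))  # False: no shared network
```

In this sketch Container B ends up with two endpoints, one per network, while Container A and the hypothetical Container C have no network in common and would need a router between them.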

4. Libnetwork

Libnetwork is the canonical implementation of the Container Network Model. Libnetwork is an open-source, cross-platform project (supporting both Linux and Windows) that is part of the Moby project and is used by Docker for container networking. It provides the essential networking functionalities in Docker environments, allowing containers to communicate with each other and with external systems, while abstracting the complexities of different network technologies and configurations. In the early days of Docker, all the networking functionality was integrated directly into the Docker daemon.

Over time, the networking code was extracted and refactored into an external library known as libnetwork, which was built around the principles of the Container Network Model (CNM). Today, all core Docker networking logic is contained within libnetwork, allowing for more modular, flexible, and scalable network management within Docker.

Libnetwork also provides native service discovery and ingress-based container load balancing, and it implements both the network control plane and the management plane. These capabilities enable services to find and reach one another by name, distribute traffic across containers, and manage network resources centrally.
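To make the ideas of service discovery and load balancing concrete, here is a deliberately simplified sketch. It is not how libnetwork implements these features internally; the `ServiceRegistry` class, the service name, and the IP addresses are all assumptions made up for illustration. It only shows the general pattern: a name resolves to one of several backends, and requests rotate across them.

```python
from itertools import cycle


class ServiceRegistry:
    """Toy name-to-backend registry with round-robin selection."""

    def __init__(self):
        self._backends = {}   # service name -> list of backend addresses
        self._rotations = {}  # service name -> round-robin iterator

    def register(self, service, address):
        self._backends.setdefault(service, []).append(address)
        # Rebuild the rotation so the new backend is included.
        self._rotations[service] = cycle(list(self._backends[service]))

    def resolve(self, service):
        """Return the next backend address for the given service name."""
        return next(self._rotations[service])


registry = ServiceRegistry()
registry.register("web", "10.0.0.2")  # made-up addresses
registry.register("web", "10.0.0.3")

for _ in range(3):
    print(registry.resolve("web"))
```

Callers only need the service name; which container answers is decided by the registry, which is the property the paragraph above attributes to built-in service discovery and load balancing.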

5. Drivers

Drivers handle network creation, connectivity, and isolation. Docker comes with several built-in network drivers, referred to as native drivers or local drivers, which include bridge, overlay, and macvlan. These drivers support the most common network topologies used in containerized applications. Additionally, third-party developers can create their own network drivers to implement alternative network topologies and more advanced configurations, allowing for greater flexibility and customization in networking setups.

Every network in Docker is associated with a specific driver, which is responsible for creating and managing all resources within that network. For example, the overlay driver is responsible for the creation, management, and deletion of all resources on every overlay network.

libnetwork allows multiple network drivers to be active at the same time.
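The relationship between networks and drivers can be sketched as a small registry. This is a conceptual model only: `NetworkController`, `Driver`, and their methods are hypothetical names chosen for this example and do not reflect libnetwork's actual Go interfaces.

```python
class Driver:
    """Base class: a driver creates and deletes the networks it owns."""
    name = "base"

    def __init__(self):
        self.networks = {}

    def create_network(self, network_name):
        self.networks[network_name] = {"driver": self.name}

    def delete_network(self, network_name):
        del self.networks[network_name]


class BridgeDriver(Driver):
    name = "bridge"


class OverlayDriver(Driver):
    name = "overlay"


class NetworkController:
    """Hypothetical dispatcher that routes each network to its driver."""

    def __init__(self):
        self.drivers = {}        # driver name -> driver instance
        self.network_owner = {}  # network name -> owning driver

    def register(self, driver):
        self.drivers[driver.name] = driver

    def create_network(self, network_name, driver_name):
        driver = self.drivers[driver_name]
        driver.create_network(network_name)
        self.network_owner[network_name] = driver

    def delete_network(self, network_name):
        # Deletion goes through the same driver that created the network.
        self.network_owner.pop(network_name).delete_network(network_name)


controller = NetworkController()
controller.register(BridgeDriver())   # several drivers active at once
controller.register(OverlayDriver())
controller.create_network("frontend", "bridge")
controller.create_network("backend", "overlay")

print(sorted(controller.drivers))
print(controller.network_owner["backend"].name)
```

Each network is owned by exactly one driver for its whole lifetime, while the controller keeps several drivers registered side by side. On a real Docker host the driver is chosen when the network is created, for example with the `--driver` option of `docker network create`.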