1. Introduction

It is called the Transformer architecture because the inputs go through a series of “transformations”.

2. What Does a Transformer Do?

A Transformer model performs several important tasks that enable it to understand and generate human-like language effectively:

- Focuses on Key Information:

Just like when you read a story, your attention naturally gravitates toward the most important details. Similarly, the transformer identifies and focuses on the most significant words in a sentence to better capture the main idea.

- Understands the Whole Context at Once:

Unlike older models that process words one by one, the transformer examines all words in a sentence simultaneously. This global view helps it understand the overall context and the relationships between words, regardless of their position.

- Evaluates Word Relationships:

The transformer calculates how strongly words are connected to each other. For instance, in a sentence about a “cat” and a “mouse,” it recognizes that these two words are closely related within the meaning of the sentence.

- Builds a Complete Understanding:

By combining the attention weights and context information, the transformer constructs a comprehensive understanding of the sentence or passage, allowing it to interpret complex language structures accurately.

- Predicts What Comes Next:

Using its understanding of context and relationships, the transformer can predict the next word or phrase. This predictive ability is what enables it to generate coherent and meaningful sentences, write stories, or complete tasks like answering questions.

3. Key Features of Transformer Architecture Explained

The Transformer model brings together several innovative mechanisms that make it highly effective for a wide range of tasks, especially in natural language processing. Below are the essential features that define how Transformers work and why they have become the foundation of modern AI systems:

1. Self-Attention Mechanism

At the core of the Transformer lies the self-attention mechanism, which enables the model to analyze relationships between all positions in an input sequence simultaneously. This means that every word in the sentence can directly interact with every other word, regardless of its position. Unlike older models that process information step by step or within a fixed range, self-attention allows the model to gain a complete view of the entire input in one go.

Because of this, every word’s representation takes into account the complete context of the entire sequence, rather than being restricted to only the preceding words.

In simpler terms, it’s similar to how a person listens during a conversation — you might pay more attention to certain key parts while giving less focus to others, depending on what is most important. Similarly, self-attention enables the model to assign different levels of importance to each word in the sentence when interpreting meaning. This helps the Transformer develop a much richer and more accurate understanding of the input text.

2. Parallel Processing Capability

Because self-attention has no step-by-step dependency between positions, Transformers can perform calculations for all positions in the sequence at the same time. This property is known as parallelization. It drastically improves training efficiency compared with traditional models like RNNs, which process sequences word by word. Note that when generating text, output tokens are still produced one at a time.

3. Capturing Long-Range Dependencies

Transformers are particularly strong at learning long-distance relationships within sequences. Since attention is not limited by distance, the model can connect tokens that are located far apart. This is a significant improvement over older models like RNNs, where distant word relationships are often lost or diluted.

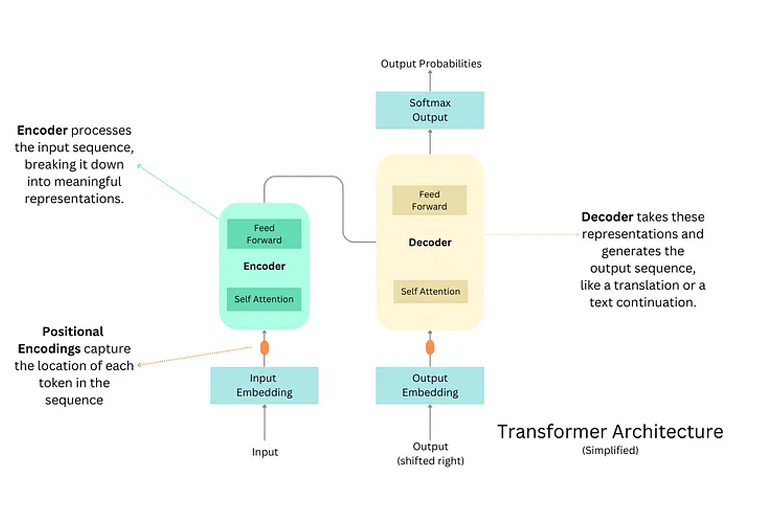

4. Positional Encoding

Because Transformers process tokens in parallel, they do not inherently know the order of tokens. To address this, they use positional encoding, which injects positional information into each word’s embedding. This helps the model distinguish between, for example, “the cat sat on the mat” and “the mat sat on the cat,” making the concept of word order clear during processing.

5. Encoder-Decoder Framework

The Transformer naturally supports an encoder-decoder architecture, where:

- The encoder processes the input sequence and encodes it into a high-level representation.

- The decoder takes this representation and produces the output sequence.

This design is especially useful for sequence-to-sequence tasks such as language translation, summarization, and text generation.

6. Transfer Learning in Transformers

One of the most powerful aspects of Transformers is their ability to benefit from transfer learning. Transformers are typically pre-trained on massive amounts of text data using self-supervised learning objectives like language modeling (predicting the next word) or masked language modeling (predicting masked words in a sentence). After pretraining, these models can be fine-tuned on specific downstream tasks with relatively little additional data, leading to excellent performance on a variety of applications.

4. Preprocessing in Transformers: Tokens, Embeddings, and Positional Encoding Explained

Before data is processed by a Transformer model, it goes through an essential preprocessing phase. This phase transforms raw text into numerical representations that the model can understand and process efficiently. Below are the key steps involved in this transformation process:

1. Tokenization: Converting Words into Numbers

The first step is tokenization, where the text is split into tokens (words or pieces of words) and each token is mapped to a numerical ID from a fixed vocabulary.

- Purpose: This step translates natural language into a form that can be handled by mathematical models.

- Example:

- Text: “AI is powerful.”

- Tokens: [101, 456, 789] (numerical IDs corresponding to words).
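As a rough illustration of the example above, here is a minimal word-level tokenizer sketch. The `vocab` dictionary, its IDs, and the `tokenize` helper are made up for this example; real tokenizers usually learn a subword vocabulary from data.

```python
# Toy word-level tokenizer. The vocabulary and its IDs are invented for
# illustration; they simply mirror the example IDs used in the text.
vocab = {"[UNK]": 0, "ai": 101, "is": 456, "powerful": 789}

def tokenize(text):
    """Lowercase, strip basic punctuation, and map each word to its ID."""
    words = text.lower().replace(".", " ").replace(",", " ").split()
    # Words missing from the vocabulary fall back to the unknown-token ID.
    return [vocab.get(word, vocab["[UNK]"]) for word in words]

token_ids = tokenize("AI is powerful.")
print(token_ids)  # [101, 456, 789]
```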

2. Word Embedding: Adding Meaning to Tokens

Once words are converted into tokens, each token is mapped to a word embedding. A word embedding is a high-dimensional vector (or tensor) that captures the semantic meaning of a word.

- Embeddings reflect the relationship between words in the vocabulary.

- Words with similar meanings have similar embeddings.

- Internally, embeddings are represented as multi-dimensional tensors, which are like advanced arrays storing meaningful numerical data.

Example of Tensor Shapes:

- 1D Tensor (Vector): shape = (3,)

- 2D Tensor (Matrix): shape = (2, 3)

- 3D Tensor: shape = (2, 2, 3)

These tensor structures enable the model to perform complex calculations efficiently.
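The embedding lookup and the tensor shapes listed above can be sketched in plain Python. This is only an illustration: the table is filled with random numbers instead of learned values, and `vocab_size`, `embedding_dim`, `embed`, and `shape` are names assumed for this example.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

vocab_size = 1000    # hypothetical vocabulary size
embedding_dim = 4    # tiny for readability; real models use far more

# Embedding table: a 2D tensor of shape (vocab_size, embedding_dim).
# In a trained model these values are learned; here they are random.
embedding_table = [
    [random.uniform(-1.0, 1.0) for _ in range(embedding_dim)]
    for _ in range(vocab_size)
]

def embed(token_ids):
    """Look up one embedding vector per token ID."""
    return [embedding_table[token_id] for token_id in token_ids]

def shape(tensor):
    """Return the shape of a nested-list tensor as a tuple."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)

sentence = embed([101, 456, 789])   # 2D: (tokens, embedding_dim)
batch = [sentence, sentence]        # 3D: (batch, tokens, embedding_dim)

print(shape(sentence[0]))  # (4,)
print(shape(sentence))     # (3, 4)
print(shape(batch))        # (2, 3, 4)
```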

3. Positional Encoding: Adding Word Order Awareness

Since Transformers process all tokens at once (in parallel), they do not naturally understand the order of words. This is solved through Positional Encoding.

- What It Does: Adds positional information to each word embedding so the model knows the order of words in a sequence.

- How It Works: A unique positional vector is created for each word based on its position and is added to its word embedding.

Why This Matters:

Without positional encoding, the sentence “The cat sat on the mat” would be interpreted the same as “The mat sat on the cat,” which would be incorrect.
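The text does not say which positional scheme is used. As one possible sketch, the code below assumes the sinusoidal encoding from the original Transformer paper; the function names and the toy embedding are illustrative only.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding for one position: sin on even indices, cos on odd."""
    encoding = []
    for i in range(d_model):
        # Each pair of dimensions (2k, 2k+1) shares one frequency.
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_position(embeddings):
    """Add the positional vector to each token embedding, element by element."""
    d_model = len(embeddings[0])
    return [
        [e + p for e, p in zip(vector, positional_encoding(pos, d_model))]
        for pos, vector in enumerate(embeddings)
    ]

# The same embedding placed at two positions yields two different inputs.
same_word = [0.5, 0.5, 0.5, 0.5]
first, second = add_position([same_word, same_word])
print(first)
print(second)
```

Because the two results differ, the model can tell apart identical words that appear at different positions.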

4. Mathematical Operations on Tensors in Transformers

Transformers rely heavily on tensor operations to manipulate and learn from data effectively. Here are the common tensor operations used during training and inference:

- Element-wise Operations:

Perform operations like addition, multiplication on each element of tensors individually. Example: emphasizing certain features by multiplying elements with specific weights.

- Matrix Operations:

Core to neural networks, especially in linear transformations, activations, and weight updates. Example: matrix multiplication is used to apply learned transformations to input data.

- Reduction Operations:

Aggregate information by calculating sums, averages, or other summaries over tensor dimensions. Example: computing the mean of attention scores.

- Concatenation Operations:

Combine multiple tensors along specified axes. Example: merging outputs from multiple attention heads.

- Broadcasting Operations:

Allow tensors of different shapes to be used together in operations by automatically expanding their dimensions as needed.

- Element-wise Comparison Operations:

Used for tasks like classification where comparisons determine outputs, such as checking which class has the highest score.
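Each operation in the list above can be tried on small nested lists. The sketch below uses plain Python rather than a tensor library, so broadcasting is written out by hand; all values are made up for illustration.

```python
# Element-wise: scale each feature by a weight.
features = [1.0, 2.0, 3.0]
weights = [0.5, 1.0, 2.0]
scaled = [f * w for f, w in zip(features, weights)]    # [0.5, 2.0, 6.0]

# Matrix multiplication: apply a (3, 2) transformation to a (3,) vector.
matrix = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
projected = [
    sum(features[i] * matrix[i][j] for i in range(3)) for j in range(2)
]                                                      # [4.0, 5.0]

# Reduction: average over a dimension.
mean_score = sum(features) / len(features)             # 2.0

# Concatenation: join the outputs of two hypothetical attention heads.
head_a, head_b = [0.1, 0.2], [0.3, 0.4]
combined = head_a + head_b                             # [0.1, 0.2, 0.3, 0.4]

# Broadcasting (done manually here): add one bias vector to every row.
rows = [[1.0, 2.0], [3.0, 4.0]]
bias = [10.0, 20.0]
shifted = [[x + b for x, b in zip(row, bias)] for row in rows]
# shifted == [[11.0, 22.0], [13.0, 24.0]]

# Comparison: index of the highest class score (argmax).
class_scores = [0.1, 0.7, 0.2]
predicted = max(range(len(class_scores)), key=lambda i: class_scores[i])  # 1
```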

5. Self-Attention Process

- Create Queries, Keys and Values

- Derive Attention Scores

- Calculate weighted sum

- Apply multiple attention heads

- Generate output (input for feed forward layer)

6. Self-Attention Layer in Transformers: A Simplified Explanation

The Self-Attention Layer is a central component in Transformer architecture, playing a crucial role in capturing relationships between words within a sentence or sequence. Its main goal is to determine the significance of each word by examining how it connects to every other word in the input, allowing the model to grasp the overall meaning of the sentence in context.

Purpose of Self-Attention

The primary objective of self-attention is to calculate the relevance of each individual word with respect to all the other words in the sequence. This enables the model to understand not just the word itself, but its contextual meaning based on surrounding words.

How Self-Attention Works: Creating Query, Key, and Value Vectors

To achieve this contextual understanding, the self-attention layer transforms each word into three different types of vectors:

- Query (Q): Represents the current word being processed and what it is looking for in the rest of the sentence.

- Key (K): Represents each word in the sequence as something a Query can be compared against to decide whether that word is relevant.

- Value (V): Holds the actual content or information associated with each word that can be used if it is deemed relevant.

Example Breakdown:

- Suppose we have the sentence: "Hi how are you?"

- For the first word “Hi”, the model generates:

- Query (Q₁) → focuses on “Hi”

- Key (K), Value (V) → refer to all words: [“Hi”, “how”, “are”, “you”]

- The same process is repeated as attention shifts to the next word:

- Query (Q₂) → focuses on “how”; Keys/Values → [“Hi”, “how”, “are”, “you”]

- Query (Q₃) → focuses on “are”; Keys/Values → [“Hi”, “how”, “are”, “you”]

- Query (Q₄) → focuses on “you”; Keys/Values → [“Hi”, “how”, “are”, “you”]


Linear Transformation of Word Embeddings

To prepare for the self-attention operation:

- The initial word representation (embedding plus positional encoding) undergoes a linear transformation.

- This transformation is a learned operation that projects high-dimensional vectors into a lower-dimensional space, producing the Q, K, and V vectors for every word.

Why It Matters:

This step enables the Transformer to efficiently calculate how much focus (attention) each word should give to others in the sentence, irrespective of their position.

Shifting Focus Mechanism

During processing, the Transformer systematically shifts focus across the sentence:

- When focusing on Query(Q₁) corresponding to the word “Hi”, it attends to Keys and Values from all words in the sentence (“Hi”, “how”, “are”, “you”).

- When moving to Query(Q₂) corresponding to “how”, the focus shifts, but the Keys and Values remain associated with the entire sentence.

- This shifting continues across the entire input, allowing the model to dynamically adjust its attention focus with each word.

7. Linear Projections

Linear projections are a type of linear transformation commonly used to reduce the dimensionality of matrices while preserving essential information. In the context of Transformers, linear projections help in extracting meaningful features from data, particularly word embeddings.

Word embeddings are dense, high-dimensional tensors that capture the semantic meaning of words. Before calculating attention scores, the Transformer architecture applies linear projections to these word embeddings. This process transforms the original high-dimensional embeddings into three lower-dimensional representations known as Query (Q), Key (K), and Value (V) vectors.

In simple terms, a “linear projection” means multiplying a vector by a learned weight matrix, which maps it from a high-dimensional space into a new (here, lower-dimensional) space.
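A minimal sketch of this projection step, assuming one weight matrix each for Q, K, and V. The sizes `d_model` and `d_k`, the helper names, and the random weights are placeholders; in a real model the weights are learned during training.

```python
import random

random.seed(0)

d_model, d_k = 4, 2   # hypothetical sizes: embedding dim and projected dim

def random_matrix(rows, cols):
    """Stand-in for a learned weight matrix (random here, trained in practice)."""
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def project(vector, weight):
    """Linear projection: multiply a (d_model,) vector by a (d_model, d_k) matrix."""
    return [
        sum(vector[i] * weight[i][j] for i in range(len(vector)))
        for j in range(len(weight[0]))
    ]

# One separate projection matrix per role.
W_q, W_k, W_v = (random_matrix(d_model, d_k) for _ in range(3))

embedding = [0.2, -0.1, 0.4, 0.7]   # one word's embedding (plus position)
q = project(embedding, W_q)
k = project(embedding, W_k)
v = project(embedding, W_v)
print(len(embedding), "->", len(q), len(k), len(v))   # 4 -> 2 2 2
```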

Self-Attention Mechanism: Step-by-Step Explanation

- Linear Projections (Q, K, V):

The model linearly projects each input word embedding into three separate vectors: Query (Q), Key (K), and Value (V). This transformation enables the model to process the input efficiently in a reduced dimensional space.

- Deriving Attention Scores:

The self-attention mechanism computes attention scores by measuring the similarity between the Query (Q) and Key (K) vectors. These scores determine the extent to which each word should focus on other words in the sequence. A higher attention score means greater relevance to the Query, while a lower score indicates lesser importance.

- Computing the Weighted Sum:

Using the attention scores as weights, the self-attention layer calculates a weighted sum of the Value (V) vectors. Words with higher attention scores have a larger influence on the final representation. This step helps the model capture contextual relationships by blending information from all words in the sequence, centered around each Query word.

8. The Softmax function

Softmax is a function that converts a list of raw scores into probabilities that are all positive and sum to 1. In Transformers, it is used to calculate the “attention weights”.
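A short sketch of softmax in plain Python may make this concrete. Subtracting the maximum score is a common numerical-stability trick and is an addition of this example, not something the text prescribes.

```python
import math

def softmax(scores):
    """Convert raw scores into positive values that sum to 1."""
    # Subtracting the maximum avoids overflow and does not change the result.
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

weights = softmax([2.0, 1.0, 0.1])
print([round(w, 3) for w in weights])   # [0.659, 0.242, 0.099]
```

Larger scores receive larger weights, but every score keeps a nonzero share.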

8.1 Scaled Dot-Product Attention

After generating the Query (Q), Key (K), and Value (V) vectors through linear projections, these vectors are passed into the Scaled Dot-Product Attention layer. This layer performs a series of mathematical operations to compute attention scores and derive a context vector for each word.

How Scaled Dot-Product Attention Works:

- Dot Product Calculation:

The first step involves calculating the dot product between the Query (Q) vector of a word and the Key (K) vectors of all words in the sentence. This step measures the similarity between the word in focus (the Query) and every word in the sentence (the Keys), producing raw attention scores.

- Scaling the Dot Product:

Since the dot product values can grow large with high-dimensional vectors, the raw attention scores are scaled down by dividing them by the square root of the dimension of the Key vectors (√dₖ). This scaling helps stabilize gradients during training and improves model performance.

- Applying Softmax Function:

The scaled scores are then passed through a softmax function, which converts them into a normalized probability distribution: each value lies between 0 and 1, and the values sum to 1. These probabilities represent how much “attention” the Query word should pay to each word in the sentence.

- Generating the Context Vector:

Finally, the model calculates a weighted sum of the Value (V) vectors using the attention probabilities as weights. This results in a context vector for each word, which is a refined representation that captures the most relevant information from the entire sentence for that specific word.
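The four steps above are often summarized as Attention(Q, K, V) = softmax(QKᵀ / √dₖ) V. The sketch below follows them one by one in plain Python; the toy Q, K, and V values are hand-picked for illustration and do not come from a trained model.

```python
import math

def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Q, K: lists of d_k-dim vectors; V: list of value vectors, one per word."""
    d_k = len(K[0])
    contexts, all_weights = [], []
    for q in Q:
        # Steps 1 and 2: dot product with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        # Step 3: softmax turns the scores into attention weights.
        weights = softmax(scores)
        # Step 4: weighted sum of the value vectors gives the context vector.
        context = [
            sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))
        ]
        contexts.append(context)
        all_weights.append(weights)
    return contexts, all_weights

# Hand-picked toy vectors for three words (illustrative values only).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

contexts, weights = scaled_dot_product_attention(Q, K, V)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row holds one word's attention weights over all three words, and each row sums to 1.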

Purpose of Scaled Dot-Product Attention:

The main objective of this process is to determine the attention weight—how much each word should focus on the other words in the sentence. Words with higher attention scores have a greater influence on the final representation of the current word, allowing the model to understand context and meaning more effectively.

9. Multi-Head Attention: Learning from Multiple Perspectives

To deepen its understanding of context, the Transformer architecture employs multiple sets of Query (Q), Key (K), and Value (V) vectors, collectively known as attention heads. Instead of relying on a single attention calculation, the Transformer uses several attention heads that operate in parallel. Each head focuses on different parts or aspects of the input sequence, capturing a richer and more diverse representation of the data.

You can think of this process like a group discussion where multiple people are listening to different parts of the conversation—each person picks up on unique details that others might miss.

How Multi-Head Attention Works:

- Parallel Attention Heads:

Multiple Scaled Dot-Product Attention mechanisms run simultaneously. Each attention head processes the same input but with its own learned projections, and therefore its own Q, K, and V vectors, enabling the model to capture multiple types of relationships and patterns in the data.

- Example for Better Understanding:

Consider a simple sentence:

“Hi, how are you?”

Suppose we are focusing on the word “Hi” (Q vector). Every head compares “Hi” with all words in the sentence, but each head can weight them differently:

- One attention head may place most of its weight on the relation between “Hi” and “how” (K, V vectors),

- Another may emphasize “Hi” and “are”,

- Another “Hi” and “you”,

- All these comparisons happen in parallel, not sequentially.

- Combining the Results:

After the parallel attention heads compute their individual outputs, their results are concatenated into a single matrix. This combined output is then passed through a final linear transformation to produce a single context vector (for example, C₁) for each word.

- Benefits of Multi-Head Attention:

- Parallel Processing: The simultaneous attention calculations significantly speed up computations, making Transformers much faster than older sequence-to-sequence models like LSTMs, which processed sequences step by step.

- Richer Understanding: By combining insights from multiple attention heads, the model can grasp more complex relationships and nuances in the data.

- Efficient Context Learning: The final output from the multi-head attention captures a comprehensive contextual meaning of each word based on its relationship with all other words in the sentence.

Generating Output and Passing to the Feed-Forward Network (FFN):

The output from the Multi-Head Attention layer contains contextualized embeddings for each word in the sentence, taking into account its relationship with every other word. This enhanced representation then serves as the input to the next component, the Feed-Forward Network (FFN).

Role of the Feed-Forward Network (FFN):

- The FFN applies additional non-linear transformations to each word’s representation individually.

- It helps in extracting deeper features from the data, potentially capturing finer details such as subtle word meanings, grammatical roles, and stylistic elements.

- The FFN also learns non-linear patterns that the self-attention mechanism alone might overlook, making the final representation more comprehensive and nuanced.

By combining Multi-Head Attention and the Feed-Forward Network, the Transformer is able to understand both contextual relationships and complex patterns, making it highly effective for various natural language processing tasks.
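The multi-head procedure described in this section (parallel heads, concatenation, final linear transformation) can be sketched as follows. The sizes, the random weights, and the helper names are assumptions for illustration; the heads are computed in a loop here, whereas real implementations compute them in parallel.

```python
import math
import random

random.seed(0)

def matmul(A, B):
    """Multiply matrix A (n x m) by matrix B (m x p)."""
    return [
        [sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
        for row in A
    ]

def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention for one head."""
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    scores = matmul(Q, K_T)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V)

def random_matrix(rows, cols):
    """Stand-in for learned weights (random here, trained in practice)."""
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(X, heads, W_o):
    """X: (words, d_model); heads: list of (W_q, W_k, W_v); W_o: output projection."""
    head_outputs = [
        attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))
        for W_q, W_k, W_v in heads
    ]
    # Concatenate the heads' outputs word by word, then apply the final projection.
    concatenated = [
        [value for head in head_outputs for value in head[i]]
        for i in range(len(X))
    ]
    return matmul(concatenated, W_o)

d_model, num_heads = 4, 2          # hypothetical sizes
d_k = d_model // num_heads
heads = [
    tuple(random_matrix(d_model, d_k) for _ in range(3))
    for _ in range(num_heads)
]
W_o = random_matrix(num_heads * d_k, d_model)

X = random_matrix(4, d_model)      # four words: "Hi", "how", "are", "you"
output = multi_head_attention(X, heads, W_o)
print(len(output), len(output[0]))  # 4 4
```

The output has one vector per word with the same size as the input, so it can be passed directly to the next layer.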

10. Feed-Forward Networks (FFN) in Transformers

The Feed-Forward Network (FFN) plays a crucial role in the Transformer architecture by enhancing the model’s ability to capture complex patterns and extract richer features from input sequences. While the self-attention mechanism focuses on relationships between words, the FFN works on each word individually, helping the model learn non-linear relationships and subtle linguistic nuances.

Purpose of the Feed-Forward Network:

The main objectives of the FFN are:

- To extract additional features from each input representation.

- To uncover deeper meanings or hidden connections within the data that the self-attention layer may overlook.

- To introduce non-linearity, allowing the model to capture complex patterns and dependencies beyond simple linear transformations.

Step-by-Step Breakdown of the Feed-Forward Layer:

- First Linear Transformation (Projection):

- The process begins with a linear transformation, where the input vector is multiplied by a learnable weight matrix and a bias is added.

- This operation projects the input into a new (often higher-dimensional) space, enabling the model to apply richer transformations and extract more complex features.

- Non-Linear Activation Function:

- Next, a non-linear activation function is applied element-wise to the output of the linear transformation.

- Common choices include ReLU (Rectified Linear Unit), which introduces non-linearity, allowing the model to capture intricate patterns and relationships within the data.

- Second Linear Transformation (Refinement):

- After activation, a second linear transformation is performed using a different set of learnable weights and bias.

- This step refines the transformed data further, possibly altering its dimensionality to match the required shape for subsequent layers.
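The three steps above can be sketched as a small function applied to each word's vector. The weights below are hand-picked toy values (sizes 2 → 3 → 2) chosen only to make the arithmetic easy to follow; a real FFN uses learned weights and much larger dimensions.

```python
def linear(vector, weight, bias):
    """y = x W + b for one input vector."""
    return [
        sum(x * weight[i][j] for i, x in enumerate(vector)) + bias[j]
        for j in range(len(bias))
    ]

def relu(vector):
    """Element-wise ReLU: negative values become 0."""
    return [max(0.0, x) for x in vector]

def feed_forward(vector, W1, b1, W2, b2):
    """Expand, apply ReLU, then project back to the input size."""
    hidden = relu(linear(vector, W1, b1))
    return linear(hidden, W2, b2)

# Hand-picked toy weights (illustrative values only).
W1 = [[1.0, -1.0, 0.5], [0.0, 2.0, -0.5]]
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b2 = [0.1, 0.1]

# The same function is applied to every word's vector independently.
words = [[1.0, 2.0], [-1.0, 0.5]]
outputs = [feed_forward(w, W1, b1, W2, b2) for w in words]
print(outputs)
```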

Additional Components to Improve Learning Stability:

- Residual Connections (Skip Connections):

- As neural networks get deeper, they may face issues like vanishing gradients or slow learning.

- Residual connections help alleviate these issues by allowing the input of a layer to bypass certain intermediate computations and directly combine with the output.

- This blending of original input (shortcut path) and transformed output enables smoother gradient flow and helps the network retain essential information while still learning new features.

- Layer Normalization:

- To ensure consistent and stable learning, the output of each layer is passed through layer normalization.

- This technique standardizes each word’s vector by adjusting its mean to 0 and variance to 1 (usually followed by a learned scale and shift), making the learning process more stable and efficient.

- Layer normalization also accelerates convergence and improves overall model robustness.
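As a rough sketch of these two components working together, the code below adds a sub-layer's output to its input and then normalizes the result. It omits the learned scale and shift parameters that layer normalization normally has, and `sublayer_out` is a made-up stand-in for an attention or FFN output.

```python
import math

def layer_norm(vector, eps=1e-5):
    """Standardize one vector to mean 0 and variance 1 (no learned scale/shift)."""
    mean = sum(vector) / len(vector)
    variance = sum((x - mean) ** 2 for x in vector) / len(vector)
    # eps guards against division by zero when the variance is tiny.
    return [(x - mean) / math.sqrt(variance + eps) for x in vector]

def add_and_norm(sublayer_input, sublayer_output):
    """Residual connection followed by layer normalization."""
    summed = [x + y for x, y in zip(sublayer_input, sublayer_output)]
    return layer_norm(summed)

x = [1.0, 2.0, 3.0, 4.0]                # input to a sub-layer
sublayer_out = [0.5, -0.5, 0.5, -0.5]   # hypothetical attention or FFN output
normalized = add_and_norm(x, sublayer_out)
print([round(v, 3) for v in normalized])
```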

11. Decoder Process in Transformer Architecture

The Decoder in the Transformer architecture is responsible for generating the target sequence. It takes the encoded input from the Encoder and processes it through several layers to predict the next word in the sequence. Like the Encoder, the Decoder uses multiple sub-layers, but with additional mechanisms to handle autoregressive (sequential) generation.

Step-by-Step Breakdown of the Decoder Process

- Input Embedding and Positional Encoding:

- The Decoder starts by embedding the target sequence (the expected output during training) into continuous dense vectors.

- To retain information about the position of each word in the sequence, positional encoding is added to these embeddings, ensuring the model can capture the order of the words.

- Masked Multi-Head Self-Attention:

- The Decoder applies a masked self-attention mechanism, meaning each word can only attend to previous words and itself in the sequence.

- This masking prevents the model from accessing “future” words during training, preserving the autoregressive property, where predictions are made step-by-step.

- During inference (prediction time), masking ensures each prediction is based solely on past generated tokens.

- Residual Connection & Layer Normalization (Post Self-Attention):

- A residual connection is applied, adding the self-attention output back to its original input.

- This is followed by layer normalization, which stabilizes training and improves convergence.

- Multi-Head Attention over Encoder’s Output:

- The next attention layer is a multi-head attention mechanism that takes the Encoder’s output as keys (K) and values (V), and the output from the Decoder’s self-attention as queries (Q).

- This allows the Decoder to attend to relevant parts of the input sequence (from the Encoder) when generating each word in the output sequence, effectively learning alignment between input and output.

- Residual Connection & Layer Normalization (Post Encoder-Attention):

- Another residual connection is applied, combining the output of the Encoder-attention layer with its input.

- This is again followed by layer normalization to maintain stable training dynamics.

- Position-wise Feed-Forward Network (FFN):

- A Feed-Forward Network, similar to the Encoder’s, processes each position individually.

- It consists of two linear (dense) layers with a ReLU activation function in between.

- This layer helps to extract more complex features and introduce non-linearity to improve the expressiveness of the model.

- Residual Connection & Layer Normalization (Post FFN):

- The output from the Feed-Forward Network is added back to its input via a residual connection, followed by layer normalization.

- Stacking of Decoder Blocks:

- Multiple such Decoder blocks (each containing the above layers) are stacked on top of each other.

- The output of one Decoder block becomes the input to the next, allowing the model to progressively refine the representation of the target sequence.

- Final Linear Layer and Softmax Output:

- After passing through all Decoder blocks, the output is fed into a final linear layer, which projects the representation into the target vocabulary size.

- A softmax function is applied to convert these values into a probability distribution, representing the likelihood of each possible word in the vocabulary being the next word in the output sequence.
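The masking used in the Decoder's self-attention step can be illustrated with a small sketch. It assumes the common approach of setting the scores of future positions to negative infinity before the softmax, so that their attention weights become exactly 0; the equal raw scores are made up for illustration.

```python
import math

def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention_weights(scores):
    """Mask out future positions (column > row) before the softmax."""
    masked = [
        [s if col <= row else -math.inf for col, s in enumerate(row_scores)]
        for row, row_scores in enumerate(scores)
    ]
    return [softmax(row) for row in masked]

# Equal raw scores for a 3-token target sequence (illustrative values only).
scores = [[1.0, 1.0, 1.0] for _ in range(3)]
for row in causal_attention_weights(scores):
    print([round(w, 3) for w in row])
# [1.0, 0.0, 0.0]
# [0.5, 0.5, 0.0]
# [0.333, 0.333, 0.333]
```

The first token can only attend to itself, the second to the first two tokens, and so on, which is what keeps generation autoregressive.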