1. Introduction

At first glance, concurrency and parallelism may seem to be the same thing, but they are actually different.

In Java programming, understanding the concepts of concurrency and parallelism is important for developing efficient and robust applications. Java, with its libraries and language features, provides rich support for both concurrency and parallelism.

A simple example of concurrency vs parallelism:

Concurrency is: “Two queues taking turns at one ATM.”

Parallelism is: “One queue split into two queues, each served by its own ATM.”

Concurrency allows multiple tasks in the same application to make progress during overlapping time periods. This is particularly helpful for I/O-intensive operations, because another task can run while one task is waiting for an I/O operation to complete. This makes more effective use of computational resources and improves application performance and responsiveness.
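To make the I/O point concrete, here is a minimal sketch using an `ExecutorService` with `Callable` and `Future`. The class name and the `simulatedIo` helper are hypothetical, and using `Thread.sleep` as a stand-in for a real blocking I/O call is an assumption for illustration only. Because both tasks spend their time waiting, their waits can overlap.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class IoConcurrencyDemo {

    // Hypothetical stand-in for an I/O call: sleeping models waiting on a disk or network.
    static String simulatedIo(String name, long millis) throws InterruptedException {
        Thread.sleep(millis);
        return name + " done";
    }

    static List<String> fetchAll() throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            List<Callable<String>> tasks = List.of(
                () -> simulatedIo("task-1", 200),
                () -> simulatedIo("task-2", 200));
            // invokeAll runs both tasks and waits until all of them complete.
            // While one thread is blocked in its simulated I/O wait, the other task
            // proceeds, so the total wall time is roughly one wait, not two.
            List<String> results = new ArrayList<>();
            for (Future<String> f : pool.invokeAll(tasks)) {
                results.add(f.get()); // futures come back in the same order as the tasks
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        long start = System.nanoTime();
        List<String> results = fetchAll();
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(results + " in ~" + elapsedMs + " ms");
    }
}
```

Exact timings vary by machine, but the elapsed time should land near 200 ms rather than the 400 ms a sequential version would need.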

Parallelism is actually doing lots of things at the same time.

2. Concurrency

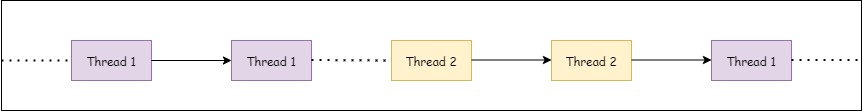

A single processor core can execute only one task at a time. If there are multiple tasks, e.g. writing code and playing music, the processor switches between them. This switching is so fast and seamless that it feels like true multitasking.

Concurrency means making progress on multiple tasks during the same period of time, but not necessarily at the same instant.

Modern processors are so fast that pausing and resuming tasks happens seamlessly, giving us the impression that the tasks are running in parallel. However, these tasks are running concurrently: two tasks can start, run, and complete in overlapping time periods. There are many ways to do concurrent programming; one of the most commonly used is multithreading.

On a single processor, concurrency is achieved through context switching. Concurrency is about dealing with lots of things at once: it is a condition that exists when at least two threads are making progress. It is a more generalized form of parallelism that can include time-slicing as a form of virtual parallelism.
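The sketch below simulates time-slicing on a single thread: a hypothetical round-robin scheduler runs one step of a task, then "switches" to the next task in the queue. It is a toy model under simplifying assumptions, not how the JVM or the operating system actually schedules threads, but it shows how two tasks can make progress in overlapping time periods with only one processor.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;

class TimeSlicingDemo {

    // A toy "task": a named sequence of steps; each call to next() runs one step.
    static Iterator<String> task(String name, int steps) {
        List<String> work = new ArrayList<>();
        for (int i = 1; i <= steps; i++) {
            work.add(name + i);
        }
        return work.iterator();
    }

    // One "processor" (a single thread) that switches tasks after every step.
    static List<String> runRoundRobin(List<Iterator<String>> tasks) {
        Deque<Iterator<String>> ready = new ArrayDeque<>(tasks);
        List<String> trace = new ArrayList<>();
        while (!ready.isEmpty()) {
            Iterator<String> current = ready.pollFirst();
            if (!current.hasNext()) {
                continue; // task has no work left
            }
            trace.add(current.next()); // run one time slice
            if (current.hasNext()) {
                ready.addLast(current); // "context switch": move to the back of the queue
            }
        }
        return trace;
    }

    public static void main(String[] args) {
        List<String> trace = runRoundRobin(List.of(task("A", 3), task("B", 3)));
        System.out.println(trace); // [A1, B1, A2, B2, A3, B3]
    }
}
```

The trace alternates between the two tasks: both are in progress at once, yet no two steps ever run at the same instant.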

3. Parallelism

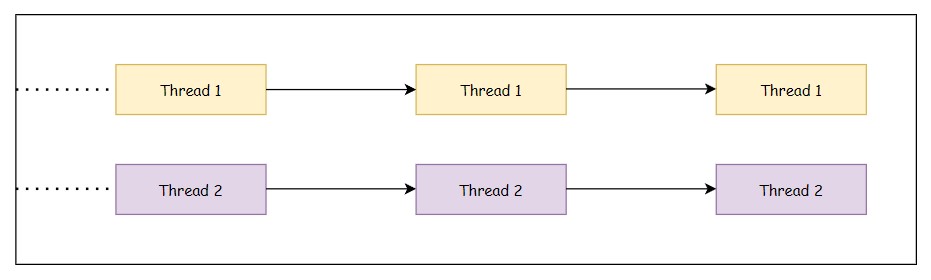

Earlier, we discussed concurrency with a single processor. However, a machine can have multiple processors, and most processors have multiple cores. In parallel computing, multiple tasks are executed on multiple processors or cores at the same time. Parallelism uses the multiple cores of the CPU, so tasks are actually executed in parallel on different cores.

Parallelism means that an application splits its task up into smaller subtasks which can be processed in parallel, for instance on multiple CPUs at the same time. So in parallelism, tasks are actually executed simultaneously.
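Splitting a task into subtasks can be sketched with a fixed thread pool: the array is cut into one chunk per worker, each chunk is summed independently, and the partial results are combined. The class and method names are illustrative, and sizing the pool with `Runtime.getRuntime().availableProcessors()` is an assumption, not a rule.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ChunkedParallelSum {

    // Splits data into roughly equal chunks, sums each chunk on a worker thread,
    // then combines the partial sums.
    static long parallelSum(long[] data, int workers)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            int chunk = Math.max(1, (data.length + workers - 1) / workers); // ceiling division
            List<Future<Long>> partials = new ArrayList<>();
            for (int from = 0; from < data.length; from += chunk) {
                final int lo = from;
                final int hi = Math.min(from + chunk, data.length);
                // Each subtask reads only its own slice, so no locking is needed.
                Callable<Long> subtask = () -> {
                    long sum = 0;
                    for (int i = lo; i < hi; i++) sum += data[i];
                    return sum;
                };
                partials.add(pool.submit(subtask));
            }
            long total = 0;
            for (Future<Long> p : partials) total += p.get(); // wait for and combine results
            return total;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        long[] data = new long[1_000_000];
        for (int i = 0; i < data.length; i++) data[i] = i + 1;
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println(parallelSum(data, cores)); // 500000500000
    }
}
```

Whether this runs faster than a plain loop depends on the data size and the number of cores; for small arrays the cost of managing threads can outweigh the gain.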

An application can be both parallel and concurrent, which means that it works on multiple tasks concurrently while also executing them at the same time on a multi-core CPU. Parallelism can improve the throughput and computational speed of a system by using multiple processors.

4. Key Concepts of Concurrency in Java

- Threads: A thread is a single path of execution within a program. A thread is a lightweight sub-process and the smallest unit of processing. A thread can be created by implementing the Runnable interface or by extending the java.lang.Thread class.

- Thread safety: Thread safety in Java means making a program behave correctly when it is used in a multithreaded environment. Common issues in thread-unsafe code include race conditions, deadlocks, and data corruption. Thread safety is achieved through mechanisms such as synchronized methods and blocks, volatile variables, and atomic variables. Java also provides many concurrency utilities, for example Executors, concurrent collections, Future and Callable, and Locks.
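Both ways of creating a thread, together with two of the thread-safety mechanisms listed above (atomic variables and synchronized methods), fit into one small sketch. The class and counter names are hypothetical; the point is that both shared counters reach the expected total because every increment is either atomic or guarded by a lock.

```java
import java.util.concurrent.atomic.AtomicInteger;

class ThreadSafetyDemo {

    private static final int INCREMENTS = 10_000;

    // Atomic variable: incrementAndGet() performs the read-modify-write as one atomic step.
    private static final AtomicInteger atomicCount = new AtomicInteger();

    // Plain int guarded by synchronized methods (the lock is ThreadSafetyDemo.class).
    private static int syncCount = 0;

    static synchronized void incrementSync() { syncCount++; }

    static synchronized int getSyncCount() { return syncCount; }

    static synchronized void resetSync() { syncCount = 0; }

    // Way 1: extend java.lang.Thread and override run().
    static class SyncCounterThread extends Thread {
        @Override
        public void run() {
            for (int i = 0; i < INCREMENTS; i++) incrementSync();
        }
    }

    static int[] runCounters() throws InterruptedException {
        atomicCount.set(0);
        resetSync();

        // Way 2: implement Runnable (here as a lambda) and hand it to a Thread.
        Runnable atomicWork = () -> {
            for (int i = 0; i < INCREMENTS; i++) atomicCount.incrementAndGet();
        };

        Thread[] threads = {
            new Thread(atomicWork), new Thread(atomicWork),
            new SyncCounterThread(), new SyncCounterThread()
        };
        for (Thread t : threads) t.start();
        for (Thread t : threads) t.join(); // wait until every thread has finished

        // With an unsynchronized plain "count++" instead, the totals could come out
        // below 20000, because ++ is a non-atomic read-modify-write (a race condition).
        return new int[] {atomicCount.get(), getSyncCount()};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] totals = runCounters();
        System.out.println(totals[0] + " " + totals[1]); // 20000 20000
    }
}
```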

5. Parallelism in Java

Java 8 introduced parallel streams, a new way of processing collections in parallel. A parallel stream divides the data into multiple segments and processes each segment in parallel. Other examples of parallelism in Java are the Fork/Join Framework and CompletableFuture.
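A minimal sketch of both APIs, assuming a simple summing workload: `LongStream.parallel()` lets the runtime split the range into segments, while a `RecursiveTask` splits the work explicitly and runs it on a `ForkJoinPool`. The class names and the `THRESHOLD` value of 1,000 are arbitrary assumptions; in practice the cut-off should be tuned for the workload.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;
import java.util.stream.LongStream;

class ParallelApisDemo {

    // Parallel stream: the runtime splits the range into segments and
    // processes them on the common ForkJoinPool.
    static long sumOfSquaresParallel(long n) {
        return LongStream.rangeClosed(1, n).parallel().map(x -> x * x).sum();
    }

    // Fork/Join: split the work until it is small enough, fork one half,
    // compute the other half in the current thread, then join the results.
    static class SumTask extends RecursiveTask<Long> {
        private static final int THRESHOLD = 1_000; // assumed cut-off; tune per workload
        private final long[] data;
        private final int lo;
        private final int hi;

        SumTask(long[] data, int lo, int hi) {
            this.data = data;
            this.lo = lo;
            this.hi = hi;
        }

        @Override
        protected Long compute() {
            if (hi - lo <= THRESHOLD) {
                long sum = 0;
                for (int i = lo; i < hi; i++) sum += data[i];
                return sum;
            }
            int mid = (lo + hi) >>> 1;
            SumTask left = new SumTask(data, lo, mid);
            SumTask right = new SumTask(data, mid, hi);
            left.fork();                     // schedule the left half asynchronously
            long rightSum = right.compute(); // compute the right half in this thread
            return left.join() + rightSum;   // wait for the left half and combine
        }
    }

    static long forkJoinSum(long[] data) {
        return ForkJoinPool.commonPool().invoke(new SumTask(data, 0, data.length));
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquaresParallel(10)); // 385
        long[] data = LongStream.rangeClosed(1, 100_000).toArray();
        System.out.println(forkJoinSum(data)); // 5000050000
    }
}
```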

6. Concurrency and Parallelism Combinations

6.1 Parallel, Not Concurrent

An application can be parallel, but not concurrent. This means that the application works on only one task at a time, dividing each task into subtasks that are processed in parallel. Each task and its subtasks are finished before the next task is divided and processed in parallel.
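The behaviour described above, where tasks are handled one after another and each task is split into subtasks that run in parallel, might look like the following sketch. Counting primes in a range is a hypothetical workload chosen for illustration; a parallel stream performs the split within each task, while the outer loop keeps the tasks themselves sequential.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.IntStream;

class ParallelNotConcurrentDemo {

    // One "task": count the primes in [from, to). The parallel stream splits it
    // into subtasks that may be evaluated on several cores.
    static long countPrimes(int from, int to) {
        return IntStream.range(from, to)
            .parallel()
            .filter(ParallelNotConcurrentDemo::isPrime)
            .count();
    }

    static boolean isPrime(int n) {
        if (n < 2) return false;
        for (int d = 2; (long) d * d <= n; d++) {
            if (n % d == 0) return false;
        }
        return true;
    }

    // Tasks run strictly one after another: a task and all of its parallel
    // subtasks finish before the next task is split up and started.
    static List<Long> runSequentially(int[][] ranges) {
        List<Long> results = new ArrayList<>();
        for (int[] r : ranges) {
            results.add(countPrimes(r[0], r[1])); // blocks until this task is done
        }
        return results;
    }

    public static void main(String[] args) {
        System.out.println(runSequentially(new int[][] {{0, 100}, {100, 200}})); // [25, 21]
    }
}
```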

6.2 Neither Concurrent Nor Parallel

An application can be neither concurrent nor parallel. This means that it works on only one task at a time, and the task is never broken down into subtasks for parallel execution.

6.3 Concurrent and Parallel

Finally, an application can also be both concurrent and parallel in two ways:

- Simple parallel concurrent execution: the application starts multiple threads, which are then executed on multiple CPUs.

- The second case is when the application works on multiple tasks concurrently and also breaks each task down into subtasks for parallel execution. This may improve performance, but it may also degrade it, because the CPUs may already be kept busy by either concurrency or parallelism alone.
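The second case can be sketched with `CompletableFuture`: several tasks run concurrently on a small thread pool, and each task uses a parallel stream to split its own work into subtasks. The workload (sums of squares over a range) and the names are assumptions for illustration. Because the parallel streams all share the common `ForkJoinPool`, the two levels compete for the same cores, which is one reason this combination does not always pay off.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.stream.Collectors;
import java.util.stream.LongStream;

class ConcurrentAndParallelDemo {

    // One task: sum the squares in [from, to); the parallel stream splits it into subtasks.
    static long sumSquares(long from, long to) {
        return LongStream.range(from, to).parallel().map(x -> x * x).sum();
    }

    static List<Long> runAll(List<long[]> ranges) {
        // A dedicated pool runs the tasks concurrently; the parallel streams inside
        // them still run on the shared common ForkJoinPool.
        ExecutorService taskPool = Executors.newFixedThreadPool(Math.max(1, ranges.size()));
        try {
            List<CompletableFuture<Long>> futures = ranges.stream()
                .map(r -> CompletableFuture.supplyAsync(() -> sumSquares(r[0], r[1]), taskPool))
                .collect(Collectors.toList());
            // join() waits for each result; the tasks themselves overlap in time.
            return futures.stream().map(CompletableFuture::join).collect(Collectors.toList());
        } finally {
            taskPool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(runAll(List.of(new long[] {1, 11}, new long[] {11, 21}))); // [385, 2485]
    }
}
```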

7. Conclusion

In conclusion, concurrency and parallelism are fundamental concepts in computer science, each offering unique benefits and challenges. Concurrency enables multiple tasks to make progress during overlapping time periods, enhancing responsiveness and resource utilization. Parallelism, on the other hand, executes multiple tasks at the same instant on multiple cores to improve performance and throughput. While they may seem similar, understanding the distinction between concurrency and parallelism is crucial for designing efficient and scalable software systems. By choosing the appropriate concurrency and parallelism techniques, developers can optimize the performance and responsiveness of their applications to meet the demands of modern computing environments.